How it works

Drizzle was originally designed to be a thin layer on top of SQL that introduces minimal runtime overhead, and by introducing Prepared Statements and Relational Queries, we’ve smashed it. It’s now fast, has exceptional DX, and has no n+1 problem for relational queries.

But how fast is it? Is it Drizzle that’s fast, or is it SQL? What should we measure?

What is a meaningful benchmark? We’ve spent quite some time running synthetic benchmarks with mitata, testing everything first in one runtime and then in separate containers so there’s no GC cross-influence. The community made their own benchmarks and helped us locate bottlenecks in Relational Queries performance and row reads, and make them really fast and efficient.

We’ve tested different SQL dialects across all the competitors, and while we were crazy fast, in some cases 100+ times faster than Prisma with SQLite, we only wanted to share benchmarks that were meaningful for businesses and developers.

From a business perspective, request roundtrip is the most important metric when it comes to server-side performance. While you can influence network latency with services like Cloudflare Argo, on the server side it usually comes down to the database queries.

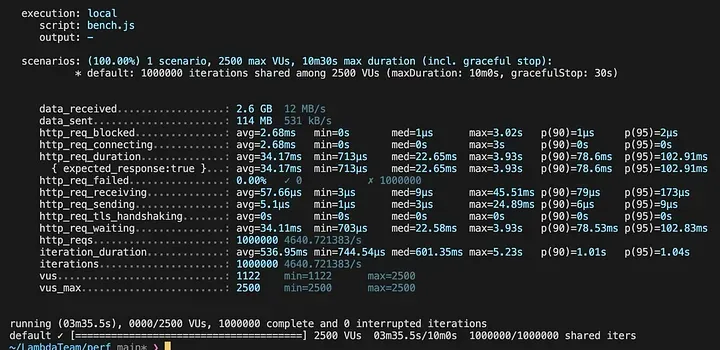

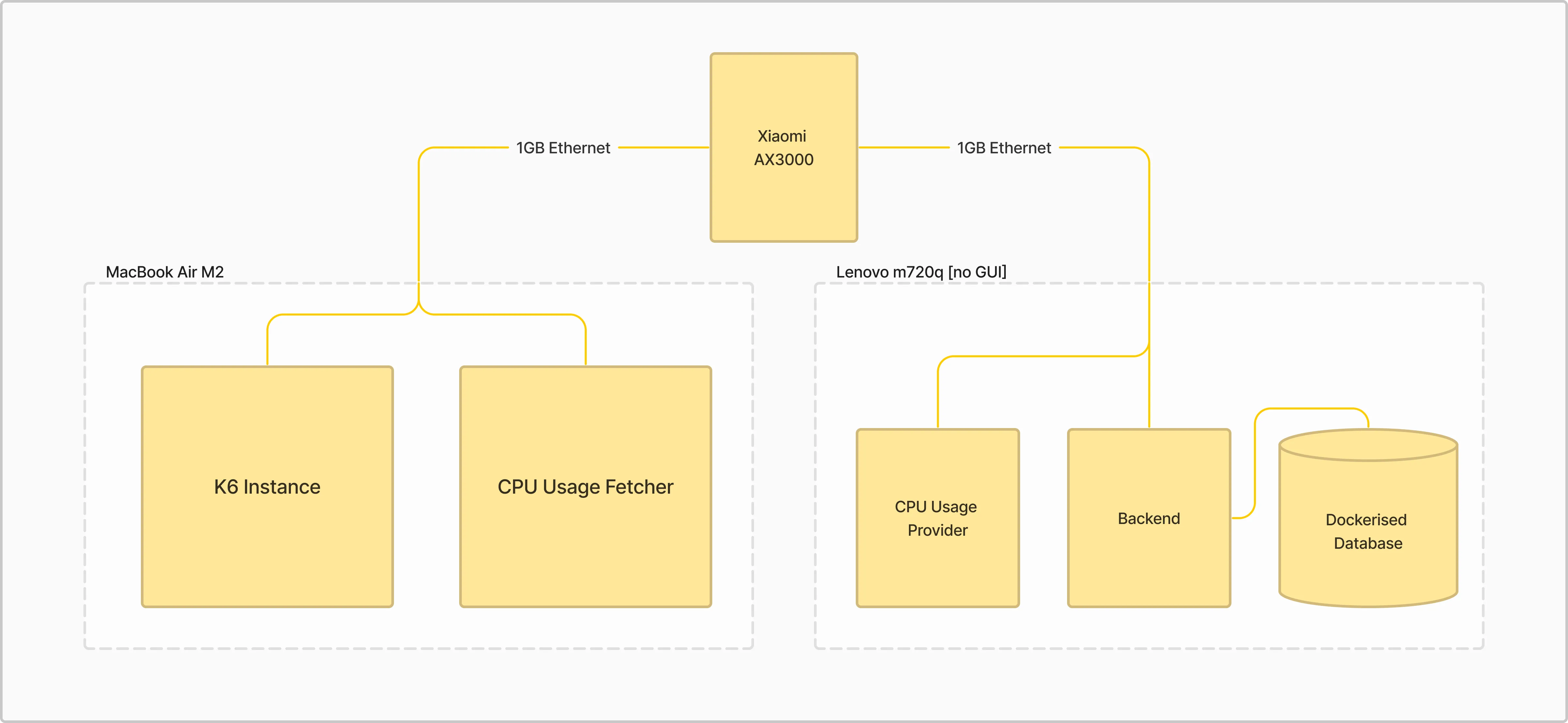

We’ve composed a test case with ~370k records in a PostgreSQL database and generated production-like E-commerce traffic benchmarks on 1GB ethernet to eliminate any discrepancies. On a Lenovo M720q, Drizzle can handle 4.6k reqs/s while maintaining a ~100ms p95 latency.

We ran our benchmarks on 2 separate machines, so that the observer does not influence the results. For the database, we’re using a PostgreSQL instance with 42MB of E-commerce data (~370k records).

The k6 benchmarking instance lives on a MacBook Air and makes 1M prepared requests through 1GB ethernet to a Lenovo M720q with an Intel Core i3-9100T and 32GB of RAM.
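To make the two headline metrics concrete, here is a minimal TypeScript sketch of how a p95 latency and an approximate run duration can be derived from raw numbers. It assumes the nearest-rank percentile definition, which may differ from the interpolation a load-testing tool applies; the function names and the sample latencies are hypothetical and are not taken from the benchmark code.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of all
// samples are less than or equal to it. (Assumed definition for this sketch.)
function percentile(samplesMs: number[], p: number): number {
  if (samplesMs.length === 0) throw new Error("no samples");
  if (p <= 0 || p > 100) throw new Error("p must be in (0, 100]");
  const sorted = [...samplesMs].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[rank - 1];
}

// Time needed to send a number of requests at a sustained request rate.
function secondsToSend(totalRequests: number, requestsPerSecond: number): number {
  return totalRequests / requestsPerSecond;
}

// Hypothetical latency samples in milliseconds, for illustration only.
const latenciesMs = [12, 15, 11, 90, 14, 13, 250, 16, 12, 18];

console.log("p50:", percentile(latenciesMs, 50), "ms");
console.log("p95:", percentile(latenciesMs, 95), "ms");
console.log("seconds for 1M requests at 4.6k reqs/s:", secondsToSend(1_000_000, 4600));
```

With a small sample like this one, p95 is dominated by the single slowest request, which is why the metric only becomes meaningful over a large number of requests. At a sustained 4.6k reqs/s, 1M requests work out to roughly 217 seconds of load; that figure is plain division, not a measured result.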

To run your own tests, follow the instructions below!

Prepare test machine

- Spin up a Docker container with PostgreSQL using the `pnpm start:docker` command. You can configure the desired database port in the `./src/docker.ts` file:

      ...
      }

      const desiredPostgresPort = 5432; // change here

      main();

- Update `DATABASE_URL` with the allocated database port in the `.env` file:

      DATABASE_URL="postgres://postgres:postgres@localhost:5432/postgres"

- Seed your database with test data using the `pnpm start:seed` command. You can change the size of the database in the `./src/seed.ts` file:

      ...
      }

      main("micro"); // nano | micro

- Make sure you have Node version 18 or above installed. You can use the `nvm use 18` command.
- Start the Drizzle/Prisma server:

      ## Drizzle
      pnpm start:drizzle

      ## Prisma
      pnpm prepare:prisma
      pnpm start:prisma

Prepare testing machine

- Generate a list of HTTP requests with `pnpm start:generate`. It outputs the list of HTTP requests to be run against the tested server to `./data/requests.json`.
- Install the k6 load tester.
- Run benchmarks 🚀

  Use the built-in benchmark runner:

      tsx bench/index --host http://192.168.31.144:3000 --name my-bench --folder results

      http://192.168.31.144:3000 // drizzle
      http://192.168.31.144:3001 // prisma

- Prepare final combined results

  After benchmarks finish, merge all outputs into a single JSON file:

      tsx bench/prepare --folder results
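For readers curious what "merging all outputs into a single JSON file" can look like, the sketch below shows one possible approach. It is not the implementation of `bench/prepare`: the `RunSummary` shape, its field names, and the `mergeSummaries` helper are all hypothetical, and the real result files may be structured differently.

```typescript
// Hypothetical shape of one run's summary. The real files written by the
// benchmark runner may contain different fields.
interface RunSummary {
  name: string;
  requestsPerSecond: number;
  p95LatencyMs: number;
}

// Merge per-run summaries into one object keyed by run name, rejecting
// duplicate names so that no run silently overwrites another.
function mergeSummaries(runs: RunSummary[]): Record<string, RunSummary> {
  const byName = new Map<string, RunSummary>();
  for (const run of runs) {
    if (byName.has(run.name)) {
      throw new Error(`duplicate run name: ${run.name}`);
    }
    byName.set(run.name, run);
  }
  return Object.fromEntries(byName);
}

// Made-up numbers purely for illustration; these are not benchmark results.
const combined = mergeSummaries([
  { name: "run-a", requestsPerSecond: 100, p95LatencyMs: 20 },
  { name: "run-b", requestsPerSecond: 50, p95LatencyMs: 40 },
]);

console.log(JSON.stringify(combined, null, 2));
```

Keying the combined object by run name keeps each server's numbers side by side, and failing on duplicate names is a cheap guard against reusing the same `--name` for two different runs.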